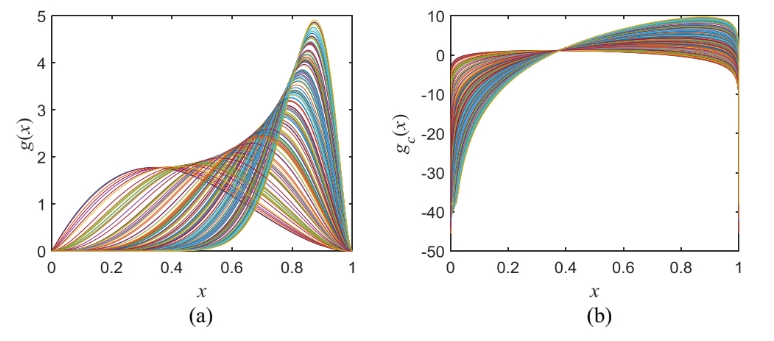

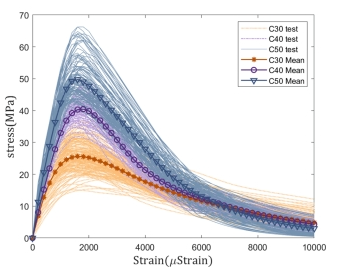

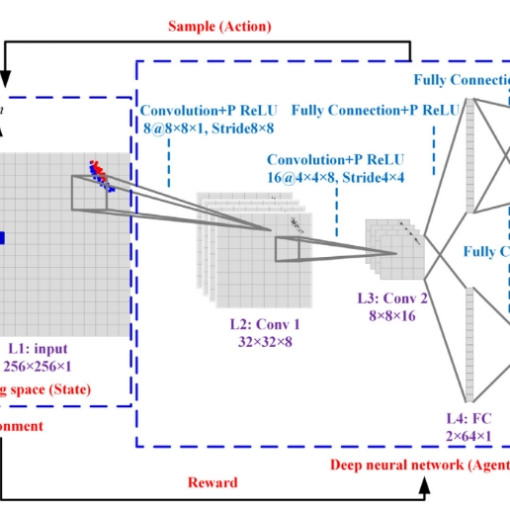

Abstract: In structural health monitoring (SHM), revealing the underlying correlations in monitoring data is of considerable theoretical and practical significance. In contrast to traditional correlation analysis for numerical data, this study analyses the correlation between the probability distributions of inter-sensor monitoring data. Because they are induced by commonly shared random excitations, many structural responses measured at different locations are correlated in distribution. Clarifying and quantifying such distributional correlations not only enables a more comprehensive understanding of the essential dependence properties of SHM data but also has appealing practical value; however, statistical methods pertinent to this topic are rare. To this end, this article proposes a novel approach using functional data analysis techniques. The monitoring data collected by each sensor are divided into time segments, and each segment is summarized by its probability density function (PDF). The geometric relations between the PDFs of different sensors, in terms of their shape mappings, are first characterized by warping functions, which are subsequently decomposed into a finite number of functional principal components (FPCs); each FPC of the warping functions characterizes one deformation pattern in the transformation of the PDF shapes from one sensor to another. Using this principle, the inter-sensor geometric correlation patterns of the PDFs can be clarified by analysing the correlation between the FPC scores of the warping functions and the PDFs from one sensor. To overcome the challenge of quantifying correlation between real-valued samples (FPC scores) and their functional counterparts (PDFs), a novel nonparametric functional regression (NFR)-based correlation coefficient is defined. Both simulation and real-data studies are conducted to illustrate and validate the proposed method.
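The pipeline outlined in the abstract (segmentation, PDF estimation, warping functions, FPC decomposition) can be illustrated with a minimal Python sketch. It is not the authors' implementation, and several choices are assumptions made only for illustration: PDFs are estimated by normalized histograms, the warping function between two sensors is taken to be the quantile mapping $T = Q_B \circ F_A$ (the abstract does not specify how warping functions are constructed), and the FPCs are approximated by a singular value decomposition of the discretized, centred warping functions. The function names `segment_pdfs`, `warping_functions`, and `fpc_scores` are hypothetical, and the NFR-based correlation coefficient is not covered.

```python
import numpy as np


def segment_pdfs(x, n_segments, edges):
    """Split a record into time segments; return one histogram PDF per segment.

    Assumption: a normalized histogram stands in for the PDF estimate, and
    `edges` covers the data range of every segment.
    """
    segments = np.array_split(np.asarray(x, dtype=float), n_segments)
    return np.vstack(
        [np.histogram(s, bins=edges, density=True)[0] for s in segments]
    )


def warping_functions(x_a, x_b, n_segments, grid):
    """Per-segment warping functions from sensor A to sensor B on `grid`.

    Assumption: the warping function is the quantile mapping
    T(x) = Q_B(F_A(x)), built from the empirical CDF of A and the
    empirical quantile function of B.
    """
    segs_a = np.array_split(np.asarray(x_a, dtype=float), n_segments)
    segs_b = np.array_split(np.asarray(x_b, dtype=float), n_segments)
    rows = []
    for seg_a, seg_b in zip(segs_a, segs_b):
        # Empirical CDF of sensor A's segment, evaluated on the grid.
        cdf_a = np.searchsorted(np.sort(seg_a), grid, side="right") / seg_a.size
        # Empirical quantile function of sensor B's segment at those levels.
        rows.append(np.quantile(seg_b, cdf_a))
    return np.vstack(rows)


def fpc_scores(curves, n_components):
    """Discretized FPCA: centre the curves, then take the leading SVD modes.

    Returns (scores, components); each row of `components` is one
    deformation pattern sampled on the grid.
    """
    centred = curves - curves.mean(axis=0)
    u, s, vt = np.linalg.svd(centred, full_matrices=False)
    return u[:, :n_components] * s[:n_components], vt[:n_components]
```

In this sketch, each row of the array returned by `warping_functions` is one segment's warping function, and the columns of the scores returned by `fpc_scores` are the real-valued samples that would then be related to the per-segment PDFs of one sensor.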